Yesterday's Rubik's Cube solving robot is a good starting point to raise the question of whether or not it is possible to model intelligence to such a fine degree that the model could be considered intelligent itself.

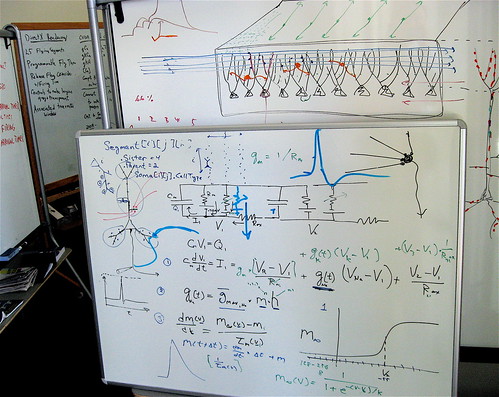

This picture of a mental model is linked from Steve Jurvetson's Flickr photostream. He owns the picture, which is made available under a Creative Commons license, some rights reserved.

There is a thought experiment called the Chinese Room. It is meant to prove that even if you could model a consciousness to a degree indistinguishable from actual conscious behavior, it still wouldn't actually be conscious; it would be just an automatic process.

The argument goes like this: Imagine you know nothing of the Chinese language (any of them, take your pick), written or spoken. You are placed into a box with two openings. Through one opening, pieces of paper with indecipherable squiggles on them are inserted. You take these squiggles and compare them with a vast library of rules that tell you what squiggles to put on another piece of paper based on what you find on the first. You then slide this second squiggled-up paper out the other opening. You've probably guessed it by now: to an outside Chinese-speaking interlocutor, the box appears to be responding correctly to questions posed to it in Chinese. It appears the box understands Chinese. You, however, inside that box, don't understand it at all; you're just following instructions. You have no consciousness or awareness of what the conversation is about, or even that you're facilitating a conversation at all. It could be anything. It's a meaningless activity to you.
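For the programmers in the audience, the mechanics of the room can be sketched in a few lines of Python. This is only a toy under loud assumptions: the rule book here is a hypothetical two-entry dictionary standing in for the "vast library of rules", and the names `RULE_BOOK`, `FALLBACK`, `person_in_room` and `chinese_room` are made up for illustration. The point it shows is that the person's job is pure symbol matching, with no reference to meaning anywhere.

```python
# Hypothetical rule book: squiggles that come in -> squiggles to send back out.
# The entries are illustrative placeholders, not a real set of rules.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会。",
}

# Hypothetical default reply for slips the rule book does not cover.
FALLBACK = "请再说一遍。"


def person_in_room(slip, rule_book):
    """Play the person in the box: find the incoming squiggles in the
    rule book and copy out whatever reply the rules prescribe.
    Nothing in this function depends on what the symbols mean."""
    return rule_book.get(slip, FALLBACK)


def chinese_room(slip):
    """The box as seen from outside: paper goes in one opening,
    paper comes out the other."""
    return person_in_room(slip, RULE_BOOK)


if __name__ == "__main__":
    print(chinese_room("你好吗？"))
```

Notice that `person_in_room` would work exactly the same if every string were swapped for an arbitrary doodle. Whatever appearance of understanding there is lives in the contents of the rule book, not in the code that looks things up.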

Poor Rubot doesn't actually solve the cube puzzle. It knows not what it does. There is no consciousness there.

Leaving aside the response that for a system to behave so indistinguishably from a conscious person as to fool other conscious people, it would need to do far more than simply return rules-based responses, there is another objection I've been thinking about.

It's true that you inside the box do not comprehend the conversation, but, in a way, the box really does. The box as a system understands. In the thought experiment, you are deliberately being placed in the role of something like a neuron... not in the role of the interpreter of neural activity. The interpreter, the consciousness, in this experiment is the set of rules. All you are doing is delivering stimuli to the rule-set, and returning output from the rule-set to the world.

So, is consciousness a rule-set? I don't know. Maybe something like that. Is that what we are, that thing we are referring to when we say "I want this" or "I'm going there"... the I inside our heads? Is that, in the end, a rule-set, partially built in conception and then elaborated through experience?

Evidence seems to suggest something like this is true.

All thought is action. All action is in some way reaction. Maybe our personhood is a really elaborate set of rules for interpreting stimuli that builds up in our meat-brains throughout our lives. If that were so, maybe we can attribute real consciousness to software that models conscious behavior so closely as to be indistinguishable from our consciousness. Just because it's not happening inside a human head doesn't mean it might not really be as aware as we are.

Tuesday, April 10, 2007

Inside the Chinese Room - Puzzle Solving

Posted by Bill at 1:46 PM

Labels: Chinese Room, Consciousness, intelligence, model, Puzzle, Rubik's Cube
